Stats1b ? column

The ? column is used to flag cognitive items which may have a problem.

The screen snapshots seen below were taken while using the Excel 2010 version of Lertap.

An item's distractors will appear in the ? column when a distractor is not selected by anyone, or when it is selected by students with above-average proficiency.

"Above average proficiency" means that the students selecting the distractor had an average test or criterion score which was above the mean of all the students who sat the test. (Note that the criterion score may be an external one.)
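The distractor rule can be sketched in a few lines of Python. This is a minimal illustration, assuming each student's response to one item and their criterion score are available as parallel lists. The function name `flag_distractors` and the data are hypothetical, not part of Lertap, and reading "above" as strictly greater than the overall mean is an assumption about how ties are handled.

```python
from statistics import mean

def flag_distractors(responses, criterion, key, options):
    """Return the distractors that the ? column rule would flag (hypothetical helper).

    responses: one item's answers, one per student
    criterion: each student's criterion score, in the same order
    key:       set of keyed-correct options for the item
    options:   every option the item offers
    """
    overall_mean = mean(criterion)
    flags = []
    for opt in options:
        if opt in key:
            continue  # keyed-correct answers follow a different rule
        scores = [c for r, c in zip(responses, criterion) if r == opt]
        if not scores:
            flags.append(opt)  # nobody selected this distractor
        elif mean(scores) > overall_mean:
            flags.append(opt)  # selected by above-average students (assumed strict >)
    return flags

# Hypothetical data: six students, one item keyed 1 with options 1-4.
print(flag_distractors([1, 2, 2, 3, 1, 2], [9, 5, 6, 8, 10, 4], {1}, [1, 2, 3, 4]))  # [3, 4]
```

Here the overall mean is 7.0; option 3 is flagged because its only selector scored 8, and option 4 because no one chose it.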

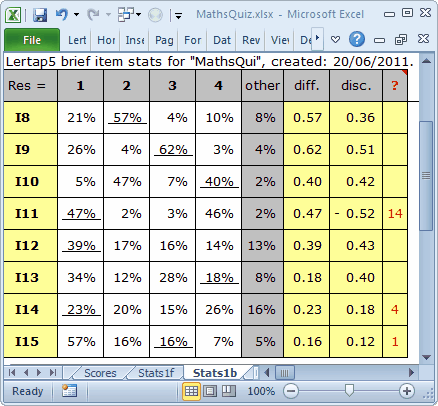

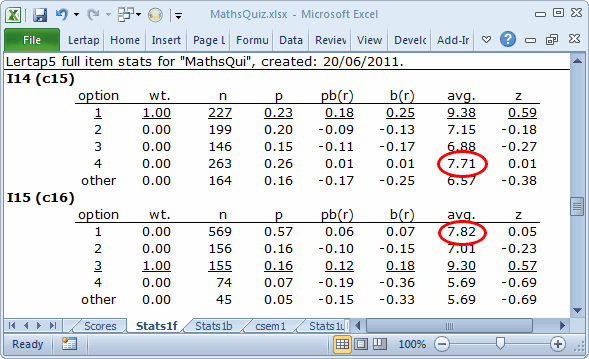

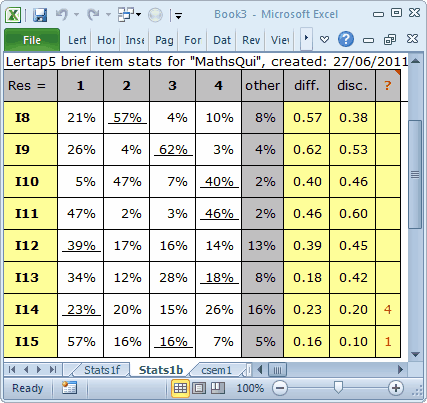

Let's look at items I14 and I15 in the Stats1f report:

The last option for I14, 4, was a distractor, an incorrect answer (the right answer or answers to an item are always underlined; options with no underlining are the distractors).

This option was selected by 263 students. The average criterion score for these students was 7.71, as seen under the avg. column. This was above the average criterion score for all students, which was 7.67, as pictured below.

On I15, the Stats1b report flagged the first option. Stats1f shows that this distractor was selected by 569 students, and their avg. score was also above the criterion average.

Keep in mind that these are just flags, notes created by Lertap to suggest that something might be amiss. We usually do not want distractors to be selected by above-average students. When they are, we may have reason to suspect "ambiguity": the wording of the distractor may need to be improved. In some cases a decision may be made to "double-key" an item, that is, to score the item in a manner which gives points for more than one answer. In Lertap this is done with a *mws line; an example which uses *mws lines may be found towards the bottom of this topic.
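The idea behind double-keying can be shown with a small scoring sketch. This does not reproduce Lertap's *mws syntax. It only illustrates, under the assumption that each item is worth one point, what it means for an item to accept more than one answer. The function `score_test` and the data are hypothetical.

```python
def score_test(responses, keys):
    """Score each student against per-item sets of accepted answers (hypothetical helper).

    responses: list of students, each a list of chosen options (one per item)
    keys:      list of sets; keys[j] holds every option that earns a point on item j
    """
    return [sum(1 for ans, ok in zip(student, keys) if ans in ok) for student in responses]

responses = [[1, 4], [1, 1], [2, 4]]
print(score_test(responses, [{1}, {4}]))     # single key on item 2: [2, 1, 1]
print(score_test(responses, [{1}, {1, 4}]))  # item 2 double-keyed:  [2, 2, 1]
```

Only the second student's total changes, because their answer to the second item is accepted once option 1 is added to that item's key.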

An item's keyed-correct answer (or answers) will appear in the ? column when the students who selected it have an average criterion score below the mean of all students.
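A companion sketch for the keyed-correct rule follows, under the same assumptions as before: one item's responses and criterion scores as parallel lists, with a hypothetical function name and made-up data. The text does not say what happens when nobody selects a keyed answer; this sketch simply does not flag that case.

```python
from statistics import mean

def flag_keyed_answers(responses, criterion, key):
    """Return keyed-correct options whose selectors averaged below the overall mean."""
    overall_mean = mean(criterion)
    flags = []
    for opt in sorted(key):
        scores = [c for r, c in zip(responses, criterion) if r == opt]
        # Assumption: an unselected keyed answer is not flagged by this rule.
        if scores and mean(scores) < overall_mean:
            flags.append(opt)
    return flags

responses = [1, 4, 4, 1, 2]
criterion = [5, 9, 8, 6, 7]  # overall mean 7.0
print(flag_keyed_answers(responses, criterion, {1}))  # [1]: selectors averaged 5.5
print(flag_keyed_answers(responses, criterion, {4}))  # []:  selectors averaged 8.5
```

The pattern mirrors the I11 case below: the keyed option draws weaker students while a distractor draws stronger ones, which is a typical sign of a mis-keyed item.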

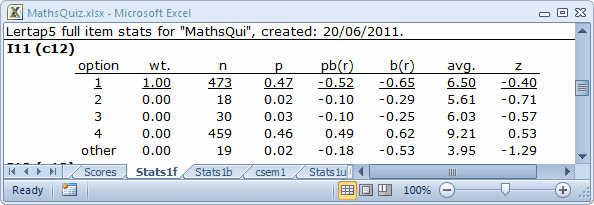

Look now at I11:

The Stats1b report flagged two of this item's options, 1 and 4. The first of these, 1, is the keyed-correct answer, selected by 473 students whose average criterion score (avg.) was 6.50, well below the overall criterion average of 7.67. Option 4, a distractor, was selected by 459 students, and their avg. score was well above the overall criterion average of 7.67.

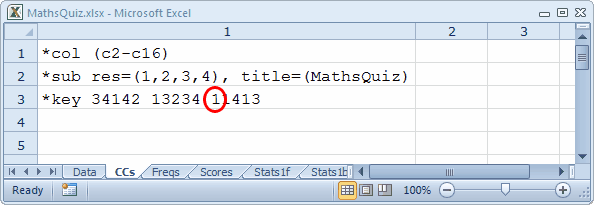

I11 has been mis-keyed. An error was made when the *key line for this test was entered in the CCs worksheet:

This sort of error is easy to fix. In this case, we'd change the 1, circled in red, to 4. After doing so, and after once again running the Interpret and Elmillon options, I11's entry in the Stats1b report was clear of flags in the ? column:

Correcting mis-keyed items should increase the test's reliability estimate, which it did for the test featured here: the reliability went from 0.68 to 0.80.
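To see why fixing a key can raise reliability, here is a small sketch that scores invented responses under a wrong key and a corrected key, then computes coefficient (Cronbach's) alpha for each. It assumes Lertap's reliability figure is coefficient alpha, the usual index for this kind of test. The helper names and data are hypothetical and do not reproduce the 0.68 and 0.80 figures above.

```python
from statistics import pvariance

def score(responses, key):
    """Score each answer 1 if it matches the key for that item, else 0."""
    return [[1 if ans == k else 0 for ans, k in zip(student, key)] for student in responses]

def coefficient_alpha(scored):
    """Cronbach's alpha for a students-by-items 0/1 matrix (needs non-zero total variance)."""
    k = len(scored[0])
    item_vars = [pvariance([row[j] for row in scored]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scored])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical responses: six students, four items, options 1-4.
responses = [
    [1, 2, 3, 4],
    [1, 2, 3, 4],
    [1, 2, 1, 4],
    [1, 3, 3, 1],
    [2, 3, 1, 1],
    [3, 1, 2, 1],
]
mis_key = [1, 2, 3, 1]    # last item keyed 1 by mistake
fixed_key = [1, 2, 3, 4]  # corrected: the last item's answer is 4

alpha_mis = coefficient_alpha(score(responses, mis_key))
alpha_fixed = coefficient_alpha(score(responses, fixed_key))
print(round(alpha_mis, 2), round(alpha_fixed, 2))  # -0.28 0.87
```

Under the wrong key, the last item rewards the weakest students, which shrinks the variance of total scores relative to the item variances and drags alpha down. With the key corrected, the items agree and alpha rises.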

Related tidbits:

Flags are also waved in Stats1f reports, where they appear in the right margin.

How to print Lertap's reports? Not hard at all, especially if you take in this topic.